How to Schedule a Pipeline in Snowflake

Snowflake offers an effective method for automating and scheduling data pipelines through the use of Tasks. These tasks enable the scheduling of SQL queries, including the “COPY INTO” command, to execute at specified intervals or according to a dependency-driven sequence. This guide will outline the steps necessary to establish and schedule a pipeline in Snowflake for the purpose of automating data ingestion.

What Are Snowflake Tasks?

Snowflake Tasks enable you to:

- Schedule SQL queries to run periodically (e.g., every 5 minutes, hourly, or daily).

- Chain tasks together to create dependent workflows.

- Automate processes like data ingestion, transformation, and cleanup.

Steps to Schedule a Pipeline

Before creating a task, ensure the following are in place:

Stage-> An external or internal stage (e.g., `@aws_stage_pilot24`) with access to your source data.

Target Table-> A Snowflake table (e.g., `plant_details`) to load the data into.

File Format-> A file format CSV that matches your data structure.

COPY INTO Command-> A tested “COPY INTO” SQL statement for loading

Please refer to the previous blog for additional information.

Snowflake Meets AWS S3: A Complete Walkthrough for Data Loading

Automating Data Load Every Hour

Here’s an example of setting up a task to load data from an AWS S3 bucket into the `plant_details` table every hour:

- Define the ‘COPY INTO’Command:

COPY INTO plant_details FROM @aws_stage_pilot24 ON_ERROR = ‘SKIP_FILE’;

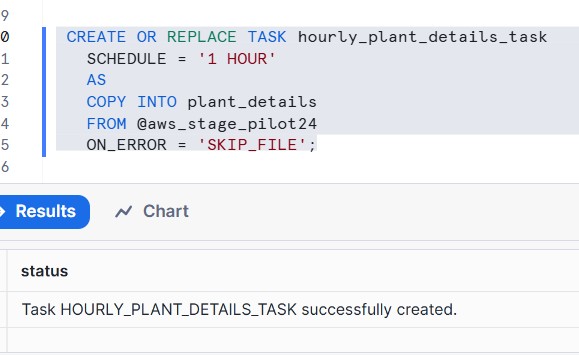

- Create the Task:

CREATE OR REPLACE TASK hourly_plant_details_task SCHEDULE = ‘1 HOUR’ AS COPY INTO plant_details FROM @aws_stage_pilot24 ON_ERROR = ‘SKIP_FILE’;

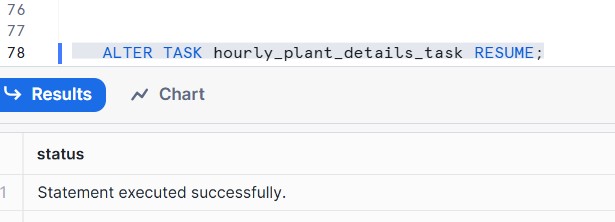

- Enable the Task:

ALTER TASK hourly_plant_details_task RESUME;

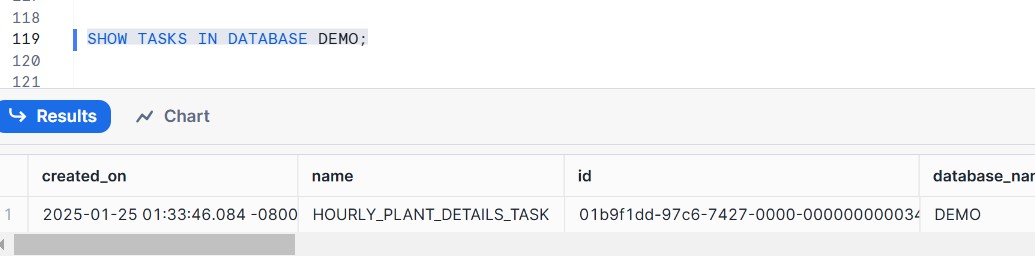

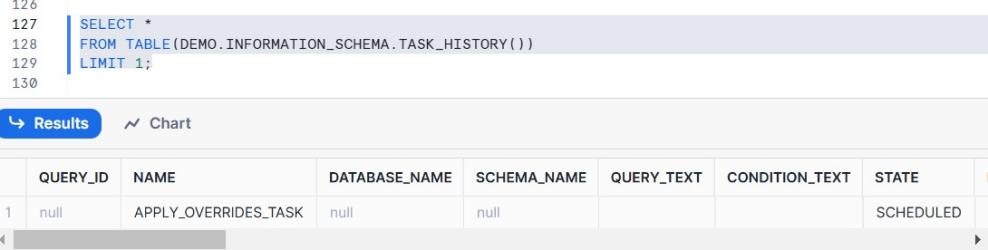

4. Monitor the Task:

SHOW TASKS IN DATABASE DEMO;

SELECT * FROM TABLE (DEMO.INFORMATION_SCHEMA.TASK_HISTORY()) LIMIT 1;

Benefits of Scheduling Pipelines in Snowflake

Automation-> Eliminates manual intervention by automating recurring tasks.

Error Handling-> The `ON_ERROR` parameter allows you to gracefully handle issues without stopping the pipeline.

Workflow Management-> Tasks can be chained together to build complex workflows.

Flexibility-> Schedules can be easily adjusted to meet changing requirements.

Conclusion

Utilizing Snowflake Tasks allows for the effective scheduling and automation of data pipelines. This includes the ability to ingest data at specified intervals or to link tasks for the development of intricate workflows, with Snowflake offering the necessary tools for optimized data management. Adhere to the outlined steps to establish your scheduled pipeline and fully harness the automation features of Snowflake.