Introduction

After creating db tables in Snowflake, the next step is to load data into them. Snowflake supports various methods to ingest data from local files, cloud storage, or external sources.

Overview of Data Loading Methods

Snowflake allows to load data using the following methods:

- Web UI: Manual file uploads for small data loads.

- SnowSQL CLI: Command-line tool for large-scale and automated loading.

- COPY INTO Command: SQL command to load data from external stages like Amazon S3, Azure Blob, or Google Cloud Storage.

- External Tools: Integration with ETL tools like Informatica, Talend, Apache NiFi, etc.

Supported File Formats

– CSV

– JSON

– AVRO

– ORC

– PARQUET

– XML

Loading Data Using COPY INTO

- Create a table (we can create using command or gui)

create table FRUIT_OPTIONS(FRUIT_ID number,FRUIT_NAME varchar(25))

- Create a file format (if not already created):

create file format smoothies.public.two_headerrow_pct_delim

type = CSV,

skip_header = 2,

field_delimiter = ‘%’,

trim_space = TRUE ;

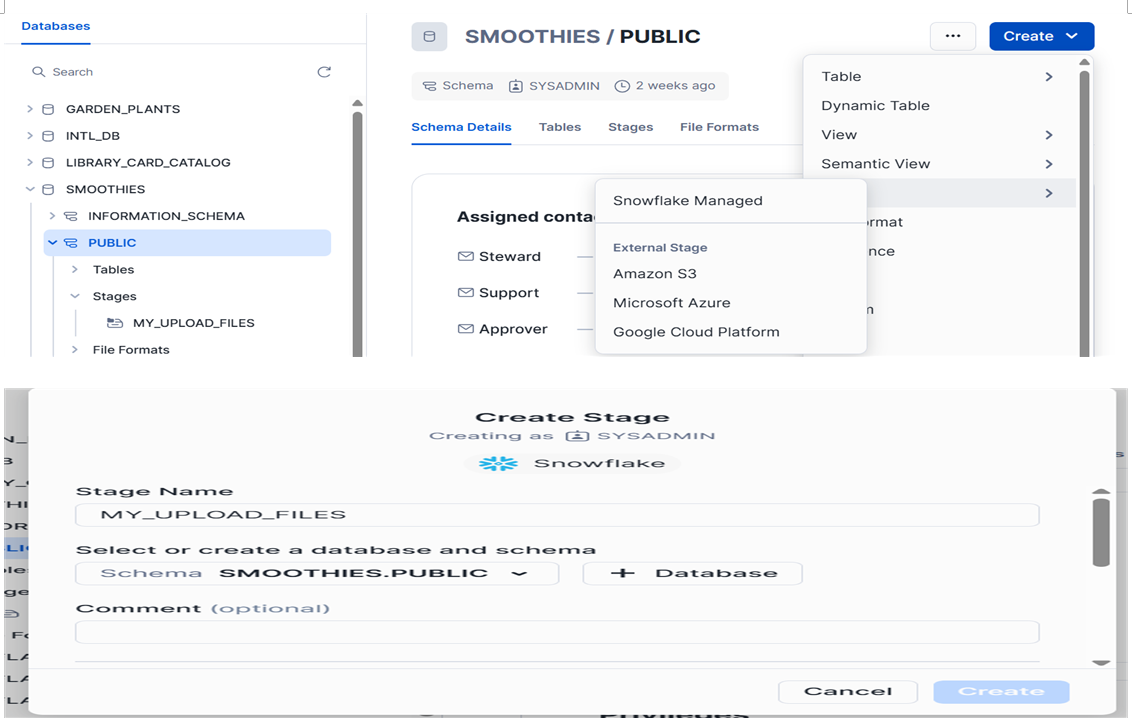

- Create stage: Provide name for stage and click create.

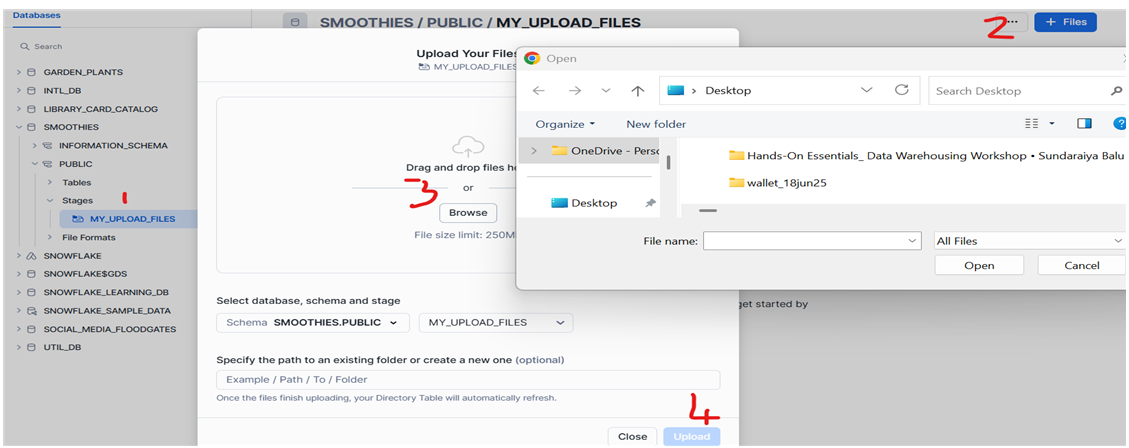

- Load .csv file into stage

- Load data into the table using copy into command:

COPY INTO smoothies.public.fruit_options @smoothies.public.my_upload_files

files = ( ‘fruits.csv’)

file_format = (format_name = smoothies.public.two_headerrow_pct_delim)

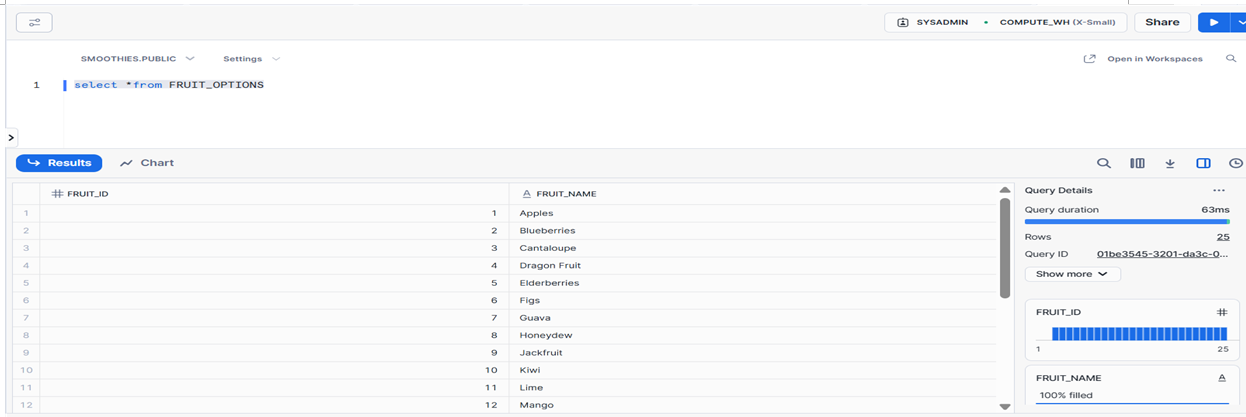

- check the data from table

Conclusion

Snowflake makes data loading flexible and efficient, with support for multiple formats and locations. Using the COPY INTO command or Web UI, users can reliably ingest data into Snowflake for further analysis.